Auto-Shades

Motivation

This project began as an exploration of how wearable devices can respond dynamically to both their environment and their user. My teammate and I set out to design a pair of interactive glasses that automatically adapt to ambient light and hand gestures, reducing eye strain and improving comfort, while still offering manual control over Bluetooth. The goal was to merge embedded systems engineering with human–computer interaction in a functional prototype that feels intuitive, responsive, and human-centered.

System Architecture

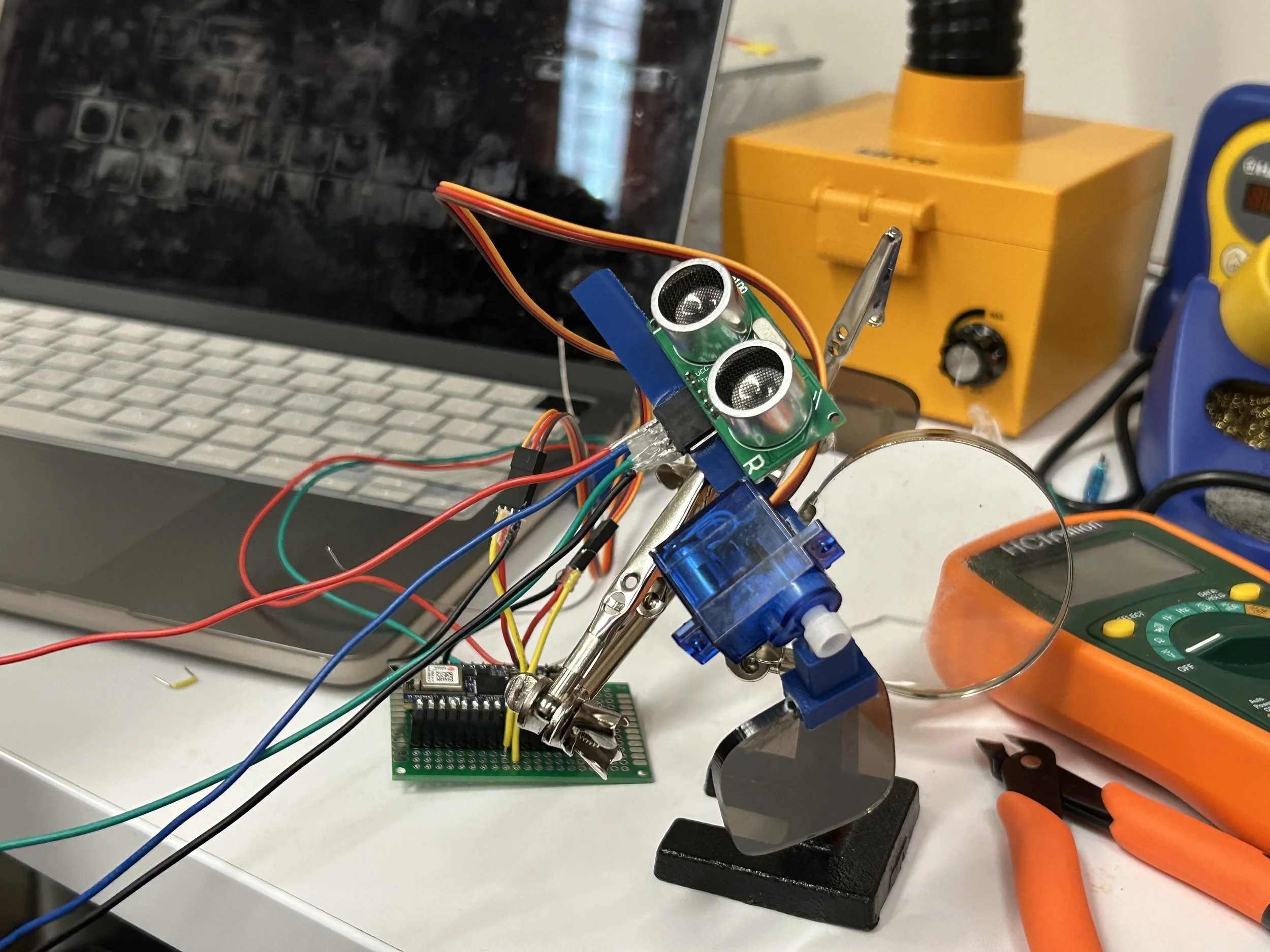

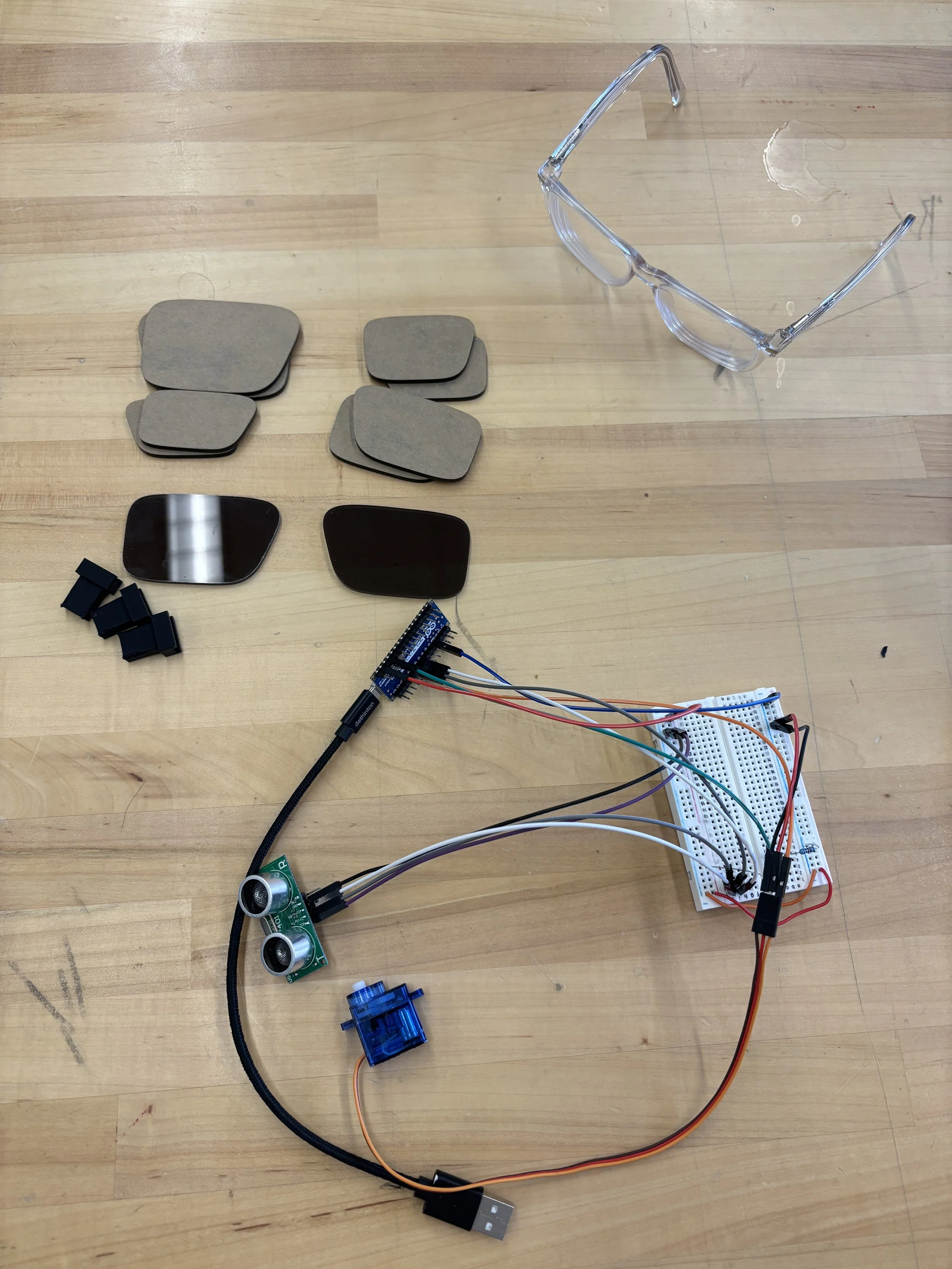

The glasses combine three input subsystems (ultrasonic distance sensing, ambient light detection, and Bluetooth communication). A central Arduino controller coordinates them and drives two micro-servo motors attached to the shades.

Ultrasonic distance sensing: Detects hand gestures, such as a wave, and toggles the shades in response. Consecutive readings are debounced and filtered through a hysteresis band to suppress noise and false triggers.

Ambient light detection: Monitors surrounding brightness, lowering the shades automatically in bright conditions and raising them again when the surroundings darken.

Bluetooth Low Energy (BLE): Enables wireless control from a smartphone or computer, allowing users to override the automatic behavior at any time.
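The input filtering described above can be sketched in portable C++. The sketch uses no Arduino headers, so the logic can be tested on a desktop. It covers the ultrasonic debouncing and hysteresis and the bright/dark switching of the light sensor. It is a minimal sketch under stated assumptions, not the project's firmware:

- The class names, the debounce count, and every threshold are hypothetical.
- The LDR is assumed to be wired so that a higher `analogRead()` value means brighter light. The opposite wiring just swaps the comparisons.
- The echo conversion uses the speed of sound (about 0.0343 cm/µs), halved for the round trip.

```cpp
#include <cstdint>

// HC-SR04: sound travels about 0.0343 cm per microsecond and the echo covers
// the distance twice, so distance = echo time * 0.0343 / 2 (roughly us / 58).
float echoToCm(uint32_t echoMicros) {
  return echoMicros * 0.0343f / 2.0f;
}

// Hypothetical gesture detector.
// Debouncing: a hand counts only after `requiredHits` consecutive readings
// closer than `nearCm`. Hysteresis: after firing, the detector re-arms only
// once a reading is farther than `farCm`, so one wave gives one toggle.
class GestureDetector {
 public:
  GestureDetector(float nearCm, float farCm, uint8_t requiredHits)
      : nearCm_(nearCm), farCm_(farCm), requiredHits_(requiredHits) {}

  // Feed one distance reading; returns true exactly once per detected wave.
  bool update(float distanceCm) {
    if (distanceCm <= 0.0f) return false;  // 0 means timeout/no echo: ignore
    if (!armed_) {
      if (distanceCm > farCm_) {  // hand clearly withdrawn: allow next gesture
        armed_ = true;
        hits_ = 0;
      }
      return false;
    }
    if (distanceCm < nearCm_) {
      if (++hits_ >= requiredHits_) {
        armed_ = false;  // fire once, then wait for the hand to leave
        return true;
      }
    } else {
      hits_ = 0;  // any far reading restarts the debounce count
    }
    return false;
  }

 private:
  float nearCm_, farCm_;
  uint8_t requiredHits_;
  uint8_t hits_ = 0;
  bool armed_ = true;
};

// Hypothetical Schmitt-trigger-style light switch on a 10-bit reading
// (0-1023), assuming higher readings mean brighter light. The shades go down
// at or above `brightOn` and come up only at or below `brightOff`.
class LightHysteresis {
 public:
  LightHysteresis(int brightOn, int brightOff) : on_(brightOn), off_(brightOff) {}

  // Returns true while the shades should be lowered.
  bool update(int reading) {
    if (!bright_ && reading >= on_) {
      bright_ = true;
    } else if (bright_ && reading <= off_) {
      bright_ = false;
    }
    return bright_;
  }

 private:
  int on_, off_;
  bool bright_ = false;
};
```

On the board, `echoMicros` would come from something like `pulseIn(echoPin, HIGH, timeout)`, which returns 0 on timeout. The detector ignores those zero readings rather than treating them as a hand at 0 cm. The gap between `nearCm` and `farCm` (and between `brightOn` and `brightOff`) stops a signal hovering at a single threshold from toggling the shades repeatedly.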

The control logic runs in a non-blocking loop built on millis(), so the system can service several asynchronous inputs without any one of them stalling the others. Each input triggers a timed lockout that prevents conflicts. For instance, after a gesture is detected, the light sensor is ignored for a few seconds so it cannot immediately override the user's choice. This coordination keeps transitions smooth and the behavior consistent.
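One way to realize the `millis()`-based timing and lockouts is sketched below, again as host-testable C++. The following are all assumptions made for illustration, and the actual firmware's rules and protocol may differ:

- The priority order: a BLE command overrides everything until the user switches back to automatic mode, and a gesture suspends the light sensor for a fixed lockout.
- The `Command` values and the one-byte BLE encoding.
- The class names `IntervalTimer` and `ShadeCoordinator`.

```cpp
#include <cstdint>

// Non-blocking replacement for delay(): due() returns true at most once per
// period. Unsigned subtraction keeps it correct when millis() wraps around.
class IntervalTimer {
 public:
  explicit IntervalTimer(uint32_t periodMs) : period_(periodMs) {}

  bool due(uint32_t nowMs) {
    if (nowMs - last_ < period_) return false;
    last_ = nowMs;
    return true;
  }

 private:
  uint32_t period_;
  uint32_t last_ = 0;
};

// Hypothetical commands and a hypothetical one-byte BLE encoding.
enum class Command : uint8_t { None, Raise, Lower, Auto };

Command decodeBle(uint8_t value) {
  switch (value) {
    case 0: return Command::Raise;
    case 1: return Command::Lower;
    case 2: return Command::Auto;   // hand control back to the sensors
    default: return Command::None;  // unknown bytes are ignored
  }
}

// Hypothetical priority rules:
//   1. A BLE Raise/Lower overrides everything until BLE sends Auto.
//   2. A gesture toggles the shades and locks out the light sensor.
//   3. Otherwise the (already hysteresis-filtered) light decision applies.
class ShadeCoordinator {
 public:
  explicit ShadeCoordinator(uint32_t gestureLockoutMs) : lockoutMs_(gestureLockoutMs) {}

  // Call once per loop() pass with millis(); returns true if shades should be down.
  bool update(uint32_t nowMs, Command ble, bool gesture, bool lightSaysLower) {
    if (ble == Command::Auto) {
      manual_ = false;
    } else if (ble == Command::Raise || ble == Command::Lower) {
      manual_ = true;
      lowered_ = (ble == Command::Lower);
    }
    if (gesture) {
      lowered_ = !lowered_;
      lockStart_ = nowMs;
      locked_ = true;
    }
    if (locked_ && nowMs - lockStart_ >= lockoutMs_) {
      locked_ = false;  // lockout expired: the light sensor may act again
    }
    if (!manual_ && !locked_) {
      lowered_ = lightSaysLower;
    }
    return lowered_;
  }

 private:
  uint32_t lockoutMs_;
  uint32_t lockStart_ = 0;
  bool locked_ = false;
  bool manual_ = false;
  bool lowered_ = false;
};
```

Inside `loop()`, each sensor would be polled only when its `IntervalTimer` is due, and the resulting events passed to `ShadeCoordinator::update(millis(), ...)`. The timers compare elapsed time with an unsigned subtraction (`now - start`) rather than `now >= start + period`. This keeps them correct when `millis()` wraps around after roughly 49.7 days.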

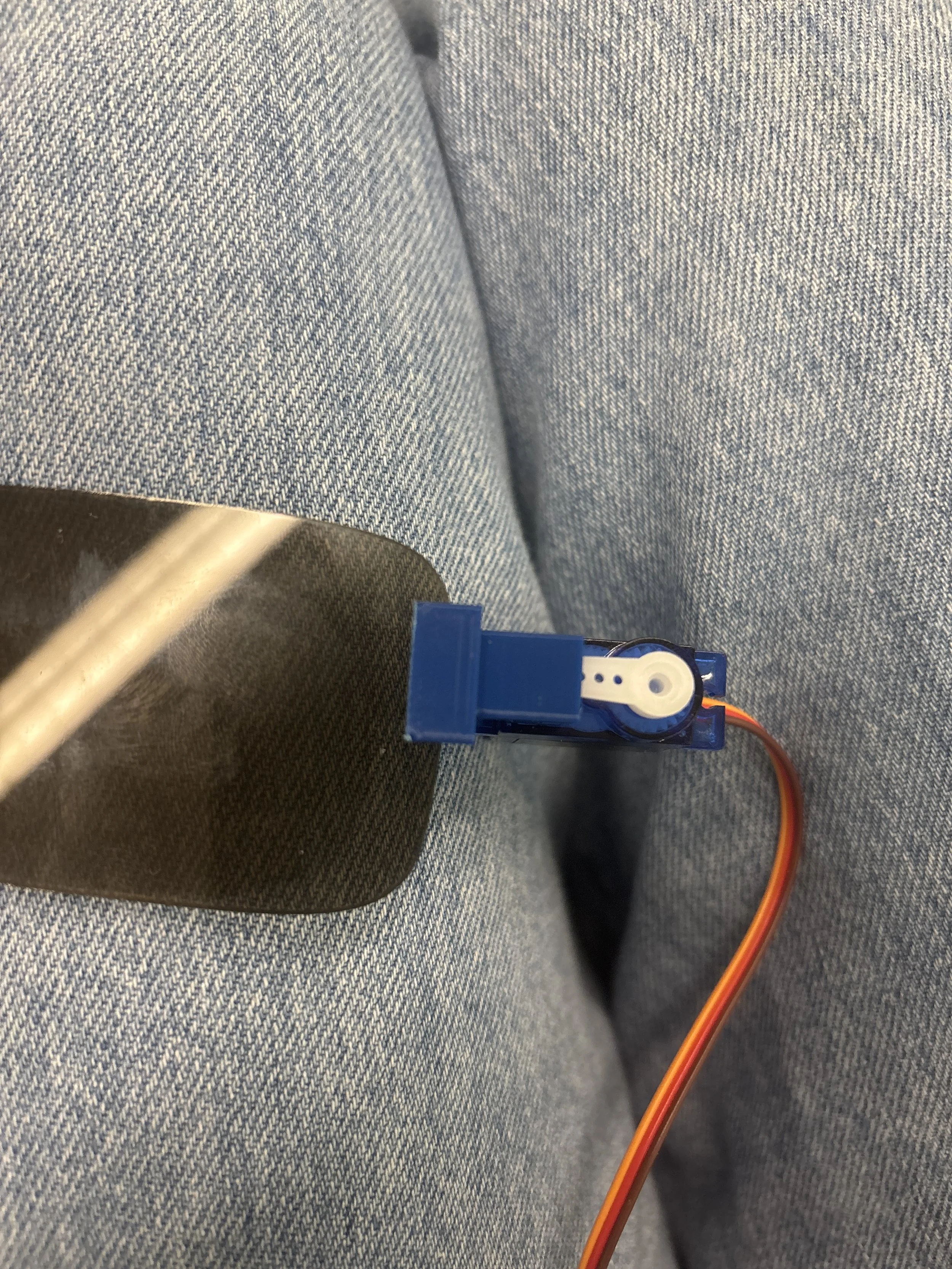

Two micro-servos mounted on the temple arms physically move the acrylic shades, while custom 3D-printed mounts connect each servo to the glasses frame. The design balances responsiveness with durability, ensuring that the motion feels both fluid and deliberate.
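One common way to make servo motion look fluid is to avoid jumping straight to the target angle. The following is a minimal, hypothetical rate limiter. The commanded angle moves at most `stepDeg` degrees every `stepMs` milliseconds, so the sweep stays non-blocking and its speed is tunable. The class name and parameters are illustrative. On the Arduino, the value returned by `update()` would be passed to the Servo library's `write()`.

```cpp
#include <cstdint>

// Hypothetical rate limiter for a hobby servo: the commanded angle moves at
// most `stepDeg` degrees every `stepMs` milliseconds toward the target,
// so the sweep is smooth and loop() never blocks.
class ServoEaser {
 public:
  ServoEaser(int startDeg, int stepDeg, uint32_t stepMs)
      : current_(startDeg), target_(startDeg), step_(stepDeg), stepMs_(stepMs) {}

  void setTarget(int deg) { target_ = deg; }
  bool atTarget() const { return current_ == target_; }

  // Call every loop() pass with millis(); returns the angle to command now
  // (on the Arduino, this is the value to pass to Servo::write()).
  int update(uint32_t nowMs) {
    if (current_ == target_ || nowMs - lastMs_ < stepMs_) return current_;
    lastMs_ = nowMs;
    int delta = target_ - current_;
    if (delta > step_) delta = step_;    // clamp upward moves
    if (delta < -step_) delta = -step_;  // clamp downward moves
    current_ += delta;
    return current_;
  }

 private:
  int current_, target_, step_;
  uint32_t stepMs_;
  uint32_t lastMs_ = 0;
};
```

With a 10° step every 20 ms, for example, a 90° sweep takes about 180 ms. Larger steps feel snappier; smaller ones look smoother.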

Technologies Used

Microcontroller: Arduino Nano

Sensors: An HC-SR04 ultrasonic sensor and an LDR (light-dependent resistor)

Actuators: Dual SG90 micro servos for shade movement

Communication: Bluetooth Low Energy via the ArduinoBLE library

Software features:

Signal debouncing and hysteresis filtering

Non-blocking timing logic for concurrent sensor input

Dynamic prioritization and lockout timers for reliable coordination

Servo calibration for consistent motion and position feedback

Fabrication:

All mechanical parts were 3D-modeled in Fusion 360, printed in PLA, and integrated into the glasses frame. The shade panels themselves were laser-cut from transparent black acrylic, chosen for its durability and sleek finish. We soldered and assembled all electronic components by hand, laying out the wiring compactly so it fits within the wearable form factor.
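The servo calibration listed among the software features could be a per-servo mapping from a logical shade position to a measured angle. Two SG90s in two printed mounts rarely line up at identical angles, so each needs its own end points. In this hypothetical sketch:

- Each servo stores its own hand-measured raised and lowered angles. The numbers in the usage note are made up.
- A mirrored servo is handled by giving it a decreasing range.

```cpp
// Hypothetical per-servo calibration. raisedDeg and loweredDeg are measured
// by hand for each servo/mount pair; a mirrored servo simply gets a range
// that decreases instead of increases.
struct ServoCalibration {
  int raisedDeg;
  int loweredDeg;

  // position: 0 = fully raised, 100 = fully lowered (clamped to 0..100).
  // Integer linear interpolation between the two calibrated end points.
  int angleFor(int position) const {
    if (position < 0) position = 0;
    if (position > 100) position = 100;
    return raisedDeg + (loweredDeg - raisedDeg) * position / 100;
  }
};
```

For example, `ServoCalibration right{20, 110}` and `ServoCalibration left{160, 70}` map position 50 to 65° and 115° respectively. Both shades therefore sit halfway down even though the servos face opposite directions.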